Multi-Modal AI: Combining Text, Voice, and Vision for Smarter Systems

What if your devices could see, hear, and understand the world like you do? Picture a smart assistant that reads your emails, identifies objects in a room, and responds to your voice seamlessly. This is the world of multi‑modal AI, where text, voice, and vision join forces to create systems that think and interact like humans. Businesses and organizations are turning to AI development services to bring these smarter systems to life, combining cutting-edge machine learning, voice AI, and computer vision. By merging these capabilities, AI delivers faster insights, smarter decisions, and experiences that feel truly intuitive. With rapid advances in these technologies, multi‑modal AI is no longer the future, it’s happening now.

What is Multi Modal AI?

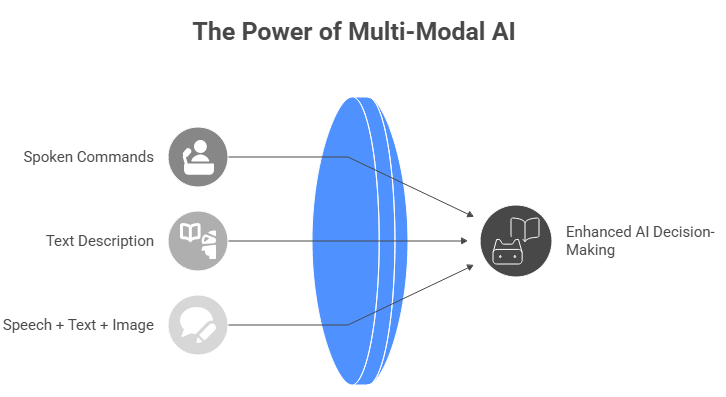

Multi‑modal AI refers to systems that process different types of data text, audio (voice), images, or video together. Rather than just using one data type (like a text‑only chatbot), multi‑modal systems combine modalities so they can interpret richer input, such as:

- Spoken commands + visual scene (voice + vision)

- Text description + image recognition (text + computer vision)

- Speech + text + image for complex tasks

This fusion lets AI make decisions, fetch insights, or trigger actions based on multiple data streams. For instance, a system could voice‑recognize a user’s question, glance at an image (or camera feed), and compose a helpful answer.

Market Trends: Why Everyone’s Betting on It

The momentum behind multi‑modal AI is huge.

- The global AI market fueled by machine learning, computer vision, NLP (text/voice) is soaring. In 2024 it was valued around US$ 233.46 billion, and by 2032 is projected to reach over US$ 1.7 trillion.

- The computer vision segment alone hit US$ 28.2 billion in 2025 and keeps climbing.

- The voice‑AI (voice assistants, speech recognition, etc.) market reached roughly US$ 10.1 billion in 2025.

These numbers matter: combining these modalities unlocks greater value. A system with only voice or only vision already has use but when you combine modalities, you get smarter systems that outperform single‑mode ones.

Also, enterprise adoption is accelerating. Many companies that once deployed text‑based AI are now exploring or integrating voice + vision capabilities via multi‑modal tools.

Real World Use Cases of Multi Modal AI

Virtual Assistants & Smart Devices

Voice AI already powers smart speakers, virtual assistants, and customer support bots. When equipped with visual awareness e.g. ability to see objects or read text they can do much more. Picture a home assistant that sees your shelf, identifies items via camera, and tells you what’s missing or an enterprise tool that reads scanned documents (vision) while summarizing via text or voice.

Automated Security & Monitoring

Computer vision tools catch anomalies in camera footage. Add voice detection (e.g. raised voices, certain sounds) and text analysis (e.g. scene metadata, logs), and you have smarter surveillance systems. That helps in manufacturing, public safety, retail loss prevention, and more.

Accessibility & Assistive Tech

Multi‑modal AI can help people with disabilities. For example: voice commands + image recognition to help visually impaired users navigate environments, or speech‑to‑text + object detection + guidance. These combined capabilities enhance autonomy and safety.

Customer Service & Retail

Retailers can combine voice‑AI chatbots (for customer interaction), text‑AI (for summarizing orders or extracting data), and computer vision (for recognizing products, verifying ID, or processing returns). This leads to faster processing, fewer errors, and better user experience.

Enhanced Content Creation & Media

Content production pipelines increasingly use generative AI: text generation, voice synthesis, image or video creation. Multi‑modal generative AI merging text, audio, and visuals enables content like narrated video, image‑driven stories, or dynamic presentations. This shifts creative workflows.

Challenges & Considerations

Building multi‑modal AI isn’t trivial.

- Data requirements: You often need aligned data i.e. the same event captured in text, audio, image. Gathering such datasets can be costly or impractical.

- Complex modeling: Combining modalities demands advanced architectures. Recent research (e.g. via contrastive pre‑training) helps, but engineering remains harder than single‑mode AI.

- Performance and latency: Real‑time voice + vision + decision logic can tax computational resources. This matters for devices, mobile apps, or on‑edge deployments.

- Privacy & security: Dealing with voice, video, and textual data raises privacy concerns. Systems must ensure secure storage, proper consent, and anonymization when needed.

- Integration complexity: For many organizations, integrating text‑AI, voice‑AI, and computer vision into existing systems legacy databases, workflows, compliance is non‑trivial.

Best Practices: How to Integrate Multi Modal AI Tools

When a team plans to adopt multi‑modal AI, here are recommended steps:

- Start with a clear problem statement: Choose use cases where combined modalities add real value (e.g. automated inspection + alert + voice feedback).

- Leverage existing AI systems: Use well-tested text‑AI (NLP), voice‑AI libraries or services, and computer vision frameworks. Combining them reduces risk compared to building from scratch.

- Ensure high‑quality data pipelines: Collect or source clean, aligned datasets. Use annotation tools, data auditing, and tagging for different modalities.

- Employ incremental integration & code review: Use formal AI Code Review to manage quality, safety, and compliance. Add one modality at a time, test thoroughly, and monitor performance.

- Build scalable & modular architecture: Design the system so you can add or update modalities as needed. Use microservices or modular AI‑tool integrations.

- Focus on user experience: For systems with end‑users (e.g. voice + visual assistants), design clear UX flows. Multi‑modal AI shines when it feels seamless and intuitive.

Why Forward Looking Organizations Should Embrace Multi Modal AI

As AI adoption spreads across industries, multi‑modal AI is increasingly becoming the standard for smarter systems. With the global AI market expanding rapidly and voice, vision, and text technologies growing in tandem waiting too long to adopt may leave you behind.

Organizations doing the most with AI in 2025 are those that combine modalities thoughtfully. They build tools that go beyond narrow tasks creating systems more flexible, more human-like, and more effective.

For governments, public organizations, and enterprises, multi‑modal AI offers new frontiers: better citizen services, efficient document analysis, smarter automation, enhanced accessibility, and improved transparency.

Final Thoughts

Multi‑modal AI the merging of text, voice, and vision represents the next major leap in building smarter systems. By enabling machines to understand and react across multiple data types, we unlock richer interactions and more powerful automation. As the AI landscape rapidly evolves, organizations that plan carefully and integrate responsibly stand to gain the most.

At App Maisters, we specialize in helping public‑sector and enterprise teams implement advanced AI solutions. We guide clients from initial planning through data strategy, AI Code Review, AI tool integration, and scalable deployment ensuring multi‑modal AI becomes a robust, effective part of your digital transformation journey.

FAQs

What is multi-modal AI and how does it work?

Multi-modal AI combines text, voice, and visual data to create smarter, human-like systems. It processes multiple inputs at once to deliver richer insights and faster decisions. App Maisters helps organizations build and deploy these advanced AI systems.

Why is multi-modal AI important for modern businesses?

It improves accuracy, context understanding, and real-time decision-making. Multi-modal systems enhance automation, customer support, content creation, and operational intelligence. App Maisters uses these capabilities to create tailored solutions for every industry.

What are the real-world examples of multi-modal AI?

Popular examples include smart assistants, security monitoring, healthcare diagnostics, retail automation, and voice-enabled apps with computer vision. App Maisters integrates all three modalities to deliver practical and scalable AI solutions.

How do I integrate multi-modal AI into my existing systems?

Start with a clear goal, ensure strong datasets, and use modular AI tools. A structured integration plan and AI code review help avoid risks. App Maisters guides organizations through every phase of multi-modal AI integration.

What is the difference between text AI, voice AI, and computer vision?

Text AI processes written information, voice AI understands speech, and computer vision analyzes images or videos. Multi-modal AI merges them, creating smarter and more intuitive systems. App Maisters builds solutions that leverage all three.

Are multi-modal AI systems better than single-modal AI?

Yes. Multi-modal AI delivers higher accuracy, deeper context, and more natural interactions than systems that use only text, voice, or vision. App Maisters helps clients upgrade from single-modal tools to advanced multi-modal models.

What industries benefit the most from multi-modal AI?

Healthcare, government, retail, finance, logistics, and manufacturing see huge gains. These industries rely on combined text, voice, and vision to automate tasks and improve outcomes. App Maisters provides custom multi-modal AI development for each sector.